Version 8 (modified by bennylp, 17 years ago)


Understanding Media Flow

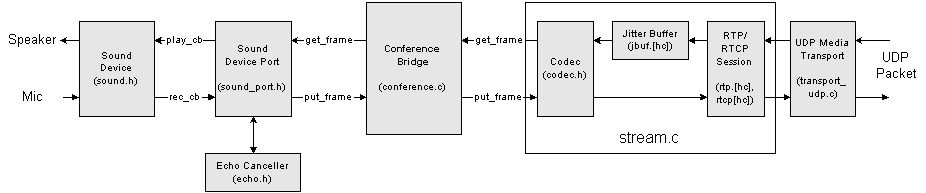

The diagram below shows the media interconnection of multiple parts in a typical call:

Media Interconnection

The main building blocks in the diagram above are the following components:

- a Sound Device Port, which is a thin wrapper around the Sound Device Abstraction that translates the sound device's rec_cb()/play_cb() callbacks into calls to the media port's pjmedia_port_put_frame()/pjmedia_port_get_frame().

- a Conference Bridge,

- a Media Stream that is created for the call,

- a Media Transport that is attached to the Media Stream to receive/transmit RTP/RTCP packets.
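The callback translation performed by the Sound Device Port can be sketched as follows. This is a minimal, self-contained C model, not PJMEDIA code: the media_port struct, snd_port_play_cb(), snd_port_rec_cb() and the loopback downstream port are hypothetical stand-ins for pjmedia_port, the real sound port callbacks, and the conference bridge.

```c
#include <assert.h>
#include <stddef.h>
#include <string.h>

#define SAMPLES_PER_FRAME 160 /* assumption: 20 ms of audio at 8 kHz */

/* Hypothetical, simplified media port: one pull and one push operation. */
typedef struct media_port {
    void *user_data;
    int (*get_frame)(struct media_port *port, short *pcm, size_t count);
    int (*put_frame)(struct media_port *port, const short *pcm, size_t count);
} media_port;

/* Hypothetical sound device port: it only knows its downstream port. */
typedef struct snd_port {
    media_port *downstream; /* normally the conference bridge */
} snd_port;

/* Playback callback: the device needs a frame, so pull one from downstream. */
int snd_port_play_cb(void *user_data, short *out, size_t count)
{
    snd_port *sp = user_data;
    return sp->downstream->get_frame(sp->downstream, out, count);
}

/* Recording callback: the device captured a frame, so push it downstream. */
int snd_port_rec_cb(void *user_data, const short *in, size_t count)
{
    snd_port *sp = user_data;
    return sp->downstream->put_frame(sp->downstream, in, count);
}

/* Illustrative downstream port: get_frame() returns the last frame put. */
typedef struct loopback {
    short last[SAMPLES_PER_FRAME];
} loopback;

int loopback_get(media_port *port, short *pcm, size_t count)
{
    loopback *lb = port->user_data;
    assert(count <= SAMPLES_PER_FRAME);
    memcpy(pcm, lb->last, count * sizeof(short));
    return 0;
}

int loopback_put(media_port *port, const short *pcm, size_t count)
{
    loopback *lb = port->user_data;
    assert(count <= SAMPLES_PER_FRAME);
    memcpy(lb->last, pcm, count * sizeof(short));
    return 0;
}
```

With the loopback port standing in for the bridge, a frame passed to snd_port_rec_cb() comes back out of the next snd_port_play_cb() call: in this model the sound device port does no buffering or mixing of its own and only forwards calls downstream.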

Setting up Media Interconnection

The media interconnection above is set up as follows:

- during initialization, the application creates the Sound Device Port and the Conference Bridge. These two objects normally remain for the whole lifetime of the application.

- when making an outgoing call or receiving an incoming call, the application creates a media transport instance (normally a UDP Media Transport). The application then puts the transport's listening address and port number in the local SDP that is given to the INVITE session.

- when the SDP offer/answer exchange in the call is complete (and the application's on_media_update() callback is called), the application creates a Media Session Info from the local and remote SDP found in the INVITE session.

- from this Media Session Info, the application creates a Media Session, also specifying the Media Transport created earlier. This creates the Media Streams according to the codec settings and other parameters in the Media Session Info, and establishes the connection between the Media Stream and the Media Transport.

- the application retrieves the Media (Audio) Stream from the Media Session and registers it with the Conference Bridge.

- the application connects the Media Stream's slot in the bridge to other slots, such as slot zero, which is normally connected to the sound device.
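The slot bookkeeping in the last two steps can be modeled with a small connection matrix. This is a hypothetical sketch, not the PJMEDIA API (the PJMEDIA counterparts are pjmedia_conf_add_port() and pjmedia_conf_connect_port()); it assumes that slot 0 belongs to the sound device and that each connection carries audio in one direction only, so a two-way call needs two connections.

```c
#include <assert.h>
#include <stdbool.h>

#define MAX_SLOTS 8 /* assumption: capacity of this toy bridge */

typedef struct conf_bridge {
    unsigned slot_count;                  /* slots in use; slot 0 = sound device */
    bool connected[MAX_SLOTS][MAX_SLOTS]; /* connected[src][sink] */
} conf_bridge;

/* Done once at application start-up, together with the sound device port. */
void conf_init(conf_bridge *conf)
{
    conf->slot_count = 1; /* slot 0 is taken by the sound device port */
    for (unsigned src = 0; src < MAX_SLOTS; ++src)
        for (unsigned sink = 0; sink < MAX_SLOTS; ++sink)
            conf->connected[src][sink] = false;
}

/* Registers a port (e.g. a call's audio stream); returns its slot or -1. */
int conf_add_port(conf_bridge *conf)
{
    assert(conf->slot_count >= 1); /* conf_init() must have been called */
    if (conf->slot_count >= MAX_SLOTS)
        return -1;
    return (int)conf->slot_count++;
}

/* One-way connection: audio from src is mixed into what sink receives. */
bool conf_connect(conf_bridge *conf, unsigned src, unsigned sink)
{
    if (src >= conf->slot_count || sink >= conf->slot_count)
        return false;
    conf->connected[src][sink] = true;
    return true;
}
```

For a call, the application would call conf_add_port() once for the stream, then conf_connect(0, stream) and conf_connect(stream, 0), so that the remote party hears the microphone and the speaker plays the remote party.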

Media Timing

The whole media flow is driven by the timing of the sound device, especially its playback callback.

The Main Flow (Playback Callback)

- when the sound device needs another frame to play to the speaker, the sound device abstraction calls the play_cb() callback that was registered with the sound device when it was created.

- the sound device port translates this play_cb() callback into a pjmedia_port_get_frame() call on its downstream port, which in this case is the Conference Bridge.

- a pjmedia_port_get_frame() call to the conference bridge triggers it to call pjmedia_port_get_frame() on every port in the bridge, mix the signals where necessary, and deliver the mixed signal to each port by calling pjmedia_port_put_frame() on it. When the bridge has finished all of this, it returns the mixed signal for slot zero to the original pjmedia_port_get_frame() call, and the sound device then plays it.

- a pjmedia_port_get_frame() call from the conference bridge to a media stream port causes the stream to take one frame from the jitter buffer, decode it using the configured codec (or apply Packet Loss Concealment (PLC) if the frame is lost), and return the PCM frame to the caller. Note that the jitter buffer is filled by a different flow (the flow that polls the network sockets), which is described in a later section.

- a pjmedia_port_put_frame() call from the conference bridge to a media stream port causes the stream to encode the PCM frame with the codec configured for the stream, pack it into an RTP packet using its RTP session, update the RTCP session and schedule RTCP transmission, and deliver the RTP/RTCP packets to the media transport that was previously attached to the stream. The media transport then sends the RTP/RTCP packets to the network.
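The pull model of the main flow can be sketched in C as below. The bridge, port type and callbacks are hypothetical simplifications, not PJMEDIA's conference.c: frames are only four samples long, every slot other than slot 0 is a port with get/put callbacks, slot 0's input is the last frame stored by bridge_put_frame() (the recording path), and mixing is assumed to be a plain saturating sum over connected sources.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>
#include <string.h>

#define NSAMP 4     /* tiny frames for illustration; real frames are longer */
#define MAX_SLOTS 4

/* Hypothetical port: a stream port would decode from its jitter buffer in
   get_frame() and encode/send RTP in put_frame(). */
typedef struct port {
    void (*get_frame)(struct port *p, short *pcm);
    void (*put_frame)(struct port *p, const short *pcm);
    short last_put[NSAMP]; /* frame most recently delivered by the bridge */
    short value;           /* sample value this toy port "receives" */
} port;

void const_get(port *p, short *pcm)
{
    for (size_t i = 0; i < NSAMP; ++i)
        pcm[i] = p->value;
}

void store_put(port *p, const short *pcm)
{
    memcpy(p->last_put, pcm, sizeof p->last_put);
}

typedef struct bridge {
    port *slots[MAX_SLOTS];               /* slots[0] unused: sound device */
    unsigned count;                       /* number of slots in use */
    bool connected[MAX_SLOTS][MAX_SLOTS]; /* connected[src][sink] */
    short mic[NSAMP];                     /* last frame from the rec_cb() path */
} bridge;

static short clip16(long v)
{
    return v > 32767 ? 32767 : v < -32768 ? -32768 : (short)v;
}

/* Recording path: just store the captured frame. */
void bridge_put_frame(bridge *b, const short *pcm)
{
    memcpy(b->mic, pcm, sizeof b->mic);
}

/* Playback path: called on behalf of play_cb(); returns slot 0's mix. */
void bridge_get_frame(bridge *b, short *out)
{
    short in[MAX_SLOTS][NSAMP];

    assert(b->count <= MAX_SLOTS);
    /* 1. Collect one frame from every slot (slot 0 = microphone). */
    memcpy(in[0], b->mic, sizeof in[0]);
    for (unsigned s = 1; s < b->count; ++s)
        b->slots[s]->get_frame(b->slots[s], in[s]);

    /* 2. Mix the connected sources for each sink and deliver the result. */
    for (unsigned sink = 0; sink < b->count; ++sink) {
        short mixed[NSAMP];
        for (size_t i = 0; i < NSAMP; ++i) {
            long sum = 0;
            for (unsigned src = 0; src < b->count; ++src)
                if (src != sink && b->connected[src][sink])
                    sum += in[src][i];
            mixed[i] = clip16(sum);
        }
        if (sink == 0)
            memcpy(out, mixed, sizeof mixed); /* 3. back to the sound device */
        else
            b->slots[sink]->put_frame(b->slots[sink], mixed);
    }
}
```

In this model a single bridge_get_frame() call, made on behalf of play_cb(), both produces the speaker frame and pushes a mixed frame into every other port, so the playback callback alone also paces the outgoing direction.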

Recording Callback

The flow above describes only one direction, i.e. towards the speaker device. But what about the audio coming from the microphone?

- When the input sound (microphone) device has finished capturing one audio frame, it reports this event by calling the rec_cb() callback function that was registered with the sound device when it was created.

- The sound device port translates the rec_cb() callback into a pjmedia_port_put_frame() call on its downstream port, which in this case is the conference bridge.

- When pjmedia_port_put_frame() is called on the conference bridge, the bridge simply stores the PCM frame in an internal buffer (a frame queue). The stored frame is later picked up by the main flow (the pjmedia_port_get_frame() call to the bridge described above) when the bridge collects frames from all ports and mixes the signals.

Potential Problem

Ideally, the sound device would call rec_cb() and play_cb() alternately, one after the other. Unfortunately this is not always the case: with many low-end sound cards it is quite common to get several consecutive rec_cb() calls followed by several consecutive play_cb() calls. To accommodate this behavior, the conference bridge's internal queue for the sound device is large enough to store eight audio frames; this size is controlled by the RX_BUF_COUNT macro in conference.c. A particularly bad sound device may issue more than eight consecutive rec_cb()/play_cb() calls, in which case RX_BUF_COUNT must be increased.
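The effect of bursty callbacks on a fixed-size queue can be sketched with a ring buffer of RX_BUF_COUNT frames. This is a hypothetical model, not the code in conference.c; in particular, dropping the oldest frame on overflow is an assumption chosen for illustration.

```c
#include <assert.h>
#include <stdbool.h>
#include <string.h>

#define RX_BUF_COUNT 8 /* queue depth described for conference.c */
#define NSAMP 160      /* assumption: 20 ms of audio at 8 kHz */

typedef struct rx_queue {
    short frames[RX_BUF_COUNT][NSAMP];
    unsigned head;    /* index of the oldest stored frame */
    unsigned count;   /* number of frames currently stored */
    unsigned dropped; /* frames lost because the queue was full */
} rx_queue;

/* rec_cb() path: append a captured frame, dropping the oldest if full. */
void rx_put(rx_queue *q, const short *pcm)
{
    assert(q->count <= RX_BUF_COUNT);
    if (q->count == RX_BUF_COUNT) {
        q->head = (q->head + 1) % RX_BUF_COUNT;
        q->count--;
        q->dropped++;
    }
    unsigned tail = (q->head + q->count) % RX_BUF_COUNT;
    memcpy(q->frames[tail], pcm, sizeof q->frames[tail]);
    q->count++;
}

/* play_cb() path: remove the oldest frame; yields silence if empty. */
bool rx_get(rx_queue *q, short *pcm)
{
    if (q->count == 0) {
        memset(pcm, 0, NSAMP * sizeof(short));
        return false;
    }
    memcpy(pcm, q->frames[q->head], sizeof q->frames[q->head]);
    q->head = (q->head + 1) % RX_BUF_COUNT;
    q->count--;
    return true;
}
```

In this model, ten consecutive rec_cb() calls without an intervening play_cb() overflow the eight-frame queue and the two oldest frames are lost. A larger RX_BUF_COUNT absorbs a longer burst, at the cost of memory and, while the queue stays full, extra latency.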

Incoming RTP/RTCP Packets

Incoming RTP/RTCP packets are not driven by either of the flows above, but by a different flow (thread): the one that polls the socket descriptors.

The standard implementation of the UDP Media Transport in PJMEDIA registers the RTP and RTCP sockets with an IOQueue (one can view the IOQueue as an abstraction of select(), although it follows the Proactor pattern more closely). Multiple media transport instances are registered with one IOQueue, so that a single poll of the IOQueue checks for incoming packets on all media transports.
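To illustrate what "a single poll checks all media transports" means, here is a sketch of one poll step written directly against POSIX select(). It is not the IOQueue API: poll_sockets() and rx_callback are hypothetical names, the callback merely plays the role of on_rx_rtp(), and the 1500-byte receive buffer is an assumed maximum packet size.

```c
#define _POSIX_C_SOURCE 200809L
#include <assert.h>
#include <stddef.h>
#include <sys/select.h>
#include <sys/socket.h>
#include <sys/time.h>
#include <sys/types.h>

/* Hypothetical per-socket handler playing the role of on_rx_rtp(). */
typedef void (*rx_callback)(size_t socket_index, const unsigned char *pkt,
                            size_t len);

/* Waits up to timeout_ms for any of the n sockets to become readable, reads
   one packet from each readable socket and passes it to cb (if not NULL).
   Returns the number of packets read, or -1 if select() fails. */
int poll_sockets(const int *fds, size_t n, long timeout_ms, rx_callback cb)
{
    fd_set readable;
    int maxfd = -1;

    assert(n > 0);
    FD_ZERO(&readable);
    for (size_t i = 0; i < n; ++i) {
        FD_SET(fds[i], &readable);
        if (fds[i] > maxfd)
            maxfd = fds[i];
    }

    struct timeval tv = { .tv_sec = timeout_ms / 1000,
                          .tv_usec = (timeout_ms % 1000) * 1000 };
    if (select(maxfd + 1, &readable, NULL, NULL, &tv) < 0)
        return -1;

    int received = 0;
    for (size_t i = 0; i < n; ++i) {
        if (!FD_ISSET(fds[i], &readable))
            continue;
        unsigned char buf[1500]; /* assumption: maximum packet size */
        ssize_t len = recv(fds[i], buf, sizeof buf, 0);
        if (len < 0)
            continue;
        if (cb != NULL)
            cb(i, buf, (size_t)len);
        ++received;
    }
    return received;
}
```

A polling thread would call poll_sockets() repeatedly with a short timeout, so that one thread serves every registered socket.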

The application can choose between different strategies for placing the IOQueue instance:

- The application can instruct the Media Endpoint to instantiate an internal IOQueue and start one or more worker threads to poll it. This is probably the recommended strategy, since polling of the media sockets is then done by a separate thread (this is also the default setting in PJSUA-API).

- Alternatively, the application can use a single IOQueue for both SIP and media sockets (basically for all network sockets in the application) and poll it from a single thread, possibly the main thread. To do this, the application specifies the IOQueue instance to use when creating the Media Endpoint and disables the worker thread. This strategy probably works best on small-footprint devices, where it reduces the number of threads in the system.
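The first strategy, a dedicated worker thread that keeps polling, can be sketched with POSIX threads and C11 atomics. The media_worker type and fake_poll() are hypothetical: fake_poll() only counts invocations, whereas a real worker would poll the media IOQueue with a short timeout (which also keeps the loop from spinning).

```c
#include <assert.h>
#include <pthread.h>
#include <stdatomic.h>
#include <stdbool.h>
#include <stddef.h>

/* Hypothetical media worker: one thread that polls until told to stop. */
typedef struct media_worker {
    pthread_t thread;
    atomic_bool quit;
    atomic_ulong polls; /* how many times the poll step has run */
} media_worker;

/* Stand-in for polling the media IOQueue; a real poll would block for a
   short timeout waiting for packets instead of returning immediately. */
static void fake_poll(media_worker *w)
{
    atomic_fetch_add(&w->polls, 1);
}

static void *worker_main(void *arg)
{
    media_worker *w = arg;
    while (!atomic_load(&w->quit))
        fake_poll(w);
    return NULL;
}

/* Returns 0 on success, or the pthread_create() error code. */
int media_worker_start(media_worker *w)
{
    atomic_init(&w->quit, false);
    atomic_init(&w->polls, 0);
    return pthread_create(&w->thread, NULL, worker_main, w);
}

void media_worker_stop(media_worker *w)
{
    atomic_store(&w->quit, true);
    int rc = pthread_join(w->thread, NULL);
    assert(rc == 0);
    (void)rc;
}
```

Under the second strategy this thread does not exist; the application's own loop calls the poll function for the shared SIP and media IOQueue instead.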

The flow of incoming RTP packets is as follows:

- an internal worker thread in the Media Endpoint polls the IOQueue with which all media transports are registered.

- when a packet arrives, the poll function triggers the on_rx_rtp() callback of the UDP media transport. This callback was previously registered with the IOQueue by the UDP media transport.

- the on_rx_rtp() callback reports the incoming RTP packet to the media stream port. The media stream was attached to the UDP media transport by the application during session initialization.

- the media stream unpacks the RTP packet using its internal RTP session, updates the RX statistics, de-frames the payload according to the codec in use (a single RTP packet can carry multiple audio frames), and puts the frames into the jitter buffer.

- the processing of the incoming packet stops here, since the frames in the jitter buffer will be picked up by the main flow (a pjmedia_port_get_frame() call to the media stream).
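The unpack and de-frame steps can be sketched as follows. rtp_parse() follows the RTP fixed header layout from RFC 3550 (version, padding, extension and CSRC-count bits, sequence number, timestamp, SSRC). The jitter buffer, by contrast, is a hypothetical fixed array, and the 80-byte frame size (10 ms of G.711 at 8 kHz, one byte per sample) is an assumption rather than a PJMEDIA setting.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>
#include <stdint.h>
#include <string.h>

#define FRAME_BYTES 80 /* assumption: 10 ms of G.711 at 8 kHz, 1 byte/sample */
#define JB_SLOTS 16    /* assumption: capacity of this toy jitter buffer */

typedef struct rtp_info {
    uint8_t payload_type;
    uint16_t seq;
    uint32_t timestamp;
    uint32_t ssrc;
    const uint8_t *payload;
    size_t payload_len;
} rtp_info;

static uint32_t be32(const uint8_t *p)
{
    return ((uint32_t)p[0] << 24) | ((uint32_t)p[1] << 16) |
           ((uint32_t)p[2] << 8) | p[3];
}

/* Parses the RFC 3550 fixed header and skips CSRCs, the header extension and
   padding. Returns 0 on success, -1 if the packet is malformed. */
int rtp_parse(const uint8_t *pkt, size_t len, rtp_info *info)
{
    assert(pkt != NULL && info != NULL);
    if (len < 12 || (pkt[0] >> 6) != 2)        /* version must be 2 */
        return -1;
    size_t off = 12 + 4u * (pkt[0] & 0x0Fu);   /* skip the CSRC list */
    if (len < off)
        return -1;
    if (pkt[0] & 0x10u) {                       /* X bit: header extension */
        if (len < off + 4)
            return -1;
        off += 4 + 4u * (((size_t)pkt[off + 2] << 8) | pkt[off + 3]);
        if (len < off)
            return -1;
    }
    size_t end = len;
    if (pkt[0] & 0x20u) {                       /* P bit: trailing padding */
        uint8_t pad = pkt[len - 1];
        if (pad == 0 || pad > end - off)
            return -1;
        end -= pad;
    }
    info->payload_type = pkt[1] & 0x7Fu;
    info->seq = (uint16_t)((pkt[2] << 8) | pkt[3]);
    info->timestamp = be32(pkt + 4);
    info->ssrc = be32(pkt + 8);
    info->payload = pkt + off;
    info->payload_len = end - off;
    return 0;
}

/* Toy jitter buffer: one slot per frame, indexed by frame timestamp. */
typedef struct jitter_buf {
    uint8_t frame[JB_SLOTS][FRAME_BYTES];
    bool used[JB_SLOTS];
} jitter_buf;

/* De-frames the payload and stores each frame; returns the number of frames,
   or -1 if the payload is not a whole number of frames. */
int jb_put_payload(jitter_buf *jb, const rtp_info *info)
{
    if (info->payload_len % FRAME_BYTES != 0)
        return -1;
    size_t n = info->payload_len / FRAME_BYTES;
    for (size_t k = 0; k < n; ++k) {
        /* The timestamp counts samples; one frame spans FRAME_BYTES samples. */
        uint32_t frame_index = info->timestamp / FRAME_BYTES + (uint32_t)k;
        size_t slot = frame_index % JB_SLOTS;
        memcpy(jb->frame[slot], info->payload + k * FRAME_BYTES, FRAME_BYTES);
        jb->used[slot] = true;
    }
    return (int)n;
}
```

Under these assumptions a 160-byte payload yields two frames, each stored in the slot derived from its timestamp, where a later get_frame() call from the main flow can find it.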
